Note: Proofread any scripts before using. Always try scripts on a test instance first. This Blog is not responsible for any damage.

Part - C

33. Managing or Modifying SCAN in Oracle RAC?

- To add a SCAN VIP resource:

$ srvctl add scan -n cluster01-scan

- To remove Clusterware resources from SCAN VIPs:

$ srvctl remove scan [-f]

- To add a SCAN listener resource:

$ srvctl add scan_listener

$ srvctl add scan_listener -p 1521

- To remove Clusterware resources from all SCAN listeners:

$ srvctl remove scan_listener [-f]

34. How to change Cluster interconnect in RAC?

On a single node in the cluster, add the new global interface specification:

$ oifcfg setif -global eth2/192.0.2.0:cluster_interconnectVerify the changes with oifcfg getif and then stop Clusterware on all nodes by running the following command as root on each node:

# oifcfg getifAssign the network address to the new network adapters on all nodes using ifconfig:

# crsctl stop crs

#ifconfig eth2 192.0.2.15 netmask 255.255.255.0 broadcast 192.0.2.255Remove the former adapter/subnet specification and restart Clusterware:

$ oifcfgdelif -global eth1/192.168.1.0

# crsctl start crs

35. How to change Public or VIP Address in RAC Cluster?

Modifying the VIP or VIP Hostname of a 10g Oracle Clusterware Node

Reference Notes:Note 283684.1 How to Change Interconnect/Public Interface IP Subnet in a 10g Cluster

For complete srvctl syntax on 10gR1, Refer to Appendix B of the RAC Admin Guide:

http://download-west.oracle.com/docs/cd/B13789_01/rac.101/b10765/toc.htm

Changing the VIP involves modification of the nodeapps, which includes the Virtual IP address, the GSD, the Listener, and Oracle Notification Services (ONS). The VIP can be modified while the nodeapps are running, however changes will not take effect until the VIP, and hence the nodeapps, are restarted.

Depending on the version of Oracle Clusterware that you are running, other resources on a node, such as database instances and ASM instances, are dependent on the VIP, so stopping the nodeapps may cause other resources to be stopped – therefore, this change should be made during a scheduled outage.

In most cases, changing Data Centers or IP addresses within a Data Center will already incur an outage for other reasons, because changes need to be made at the operating system level, and servers may even need to be moved – so there is most likely a scheduled outage for this type of maintenence already.

However, in some cases – for example, if a VIP IP address was perhaps mistyped in DNS and needs to be corrected, you may be able to minimize the downtime by only modifying a single node. Also, as of 10.2.0.3 and higher versions, the ASM instance and DB instances no longer depend on the VIP, so it is possible to stop the nodeapps without bringing down the ASM instance or DB instance – but client connectivity is limited while the VIP is offline.

Verifying Current VIP configuration

1. The first step that should be done is to confirm the current configuration of the VIP. This is most easily accomplished by running the command:

srvctl config nodeapps -n -aC:>srvctl config nodeapps -n node1 -a

VIP exists.: /node1-v/168.148.44.94/255.255.255.0/Public

These outputs show that:Any of the above configuration parameters associated with the VIP can be changed if they were originally input incorrectly, or perhaps they need to be changed due to external reasons (such as a data center move, or IP change as mentioned above).

The VIP Hostname is ‘node1-v’

The VIP IP address is ’168.148.44.94′

The VIP subnet mask is ’255.255.255.0′

The Interface Name used by the VIP is called ‘Public’

Note:

In addition, you can also verify the IP address and interface of the VIP by running the ‘ifconfig’ command or ‘ifconfig -a’, and looking for the IP address associated with an interface ending in ‘:1′. The example below, on Linux, shows the interface as ‘eth0:1′

=============================

eth0:1 Link encap:Ethernet HWaddr 00:01:03:2C:69:BB

inet addr:192.168.2.125 Bcast:192.168.2.255 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

…

…

=============================

On the Windows platform, run the command ‘ipconfig /all’. This will list all IP addresses bound to a given adapter, including the VIP, but it will not be obvious just from this output which one is the VIP address and which is the actual host IP address. Therefore, using ‘srvctl config nodeapps -n -a’ is the surest bet.

2. Once you have determined what the current configuration of the nodeapps is, be sure to make note of what values will remain unchanged, and what the correct/new values that you need will be. For example, if you are just changing the “Interface Name” you will need to know what the correct interface name is, but you also need to note the VIP hostname, IP address and subnet mask to ensure that this information is re-entered correctly.

Stopping Resources

3. Once you are ready to make the change, stop all resources that are dependent on the VIP on a given node. This includes all RAC database instances on that node, as well as the ASM instance on that node, if it exists and is being used (Note that starting with 10.2.0.3, the dependency between the VIP and the database and ASM instances has been removed):

3a). Stop database instances:4. Next, stop the nodeapps using srvctl – i.e:

$ srvctl stop instance -d grid -i grid1

Where the database name is specified via the ‘-d’ option, and the instance on the appropriate node is specified with the ‘-i’ option.

Alternatively, to stop the entire database, on all nodes, you can issue the stop database command:

$ srvctl stop database -d grid

3b). To stop the ASM instance on the node, issue the following command:

$ srvctl stop asm -n node1

This command should be issued for each ASM instance using the appropriate node name. Alternatively, it is also possible to stop these resources via SQL*Plus and/or, on the Windows platform by stopping the associated services.

$ srvctl stop nodeapps -n node1This will stop the VIP, GSD, Listener, and ONS daemon currently running on the nodes specified

Optional you can disable them not to restart just in case

srvctl disable database -d grid5. Verify that the VIP is no longer running by executing the ‘ifconfig -a’ or ‘ipconfig /all’ command again, and confirm that the IP address is no longer listed as running in the output.

srvctl disable asm -n node1

srvctl disable nodeapps -n node1

etc.

If the interface still shows as online, this may be an indication that a resource which is dependent on the VIP is still running. The crs_stat command can help to show resources that are still online.

Change in hosts file

6. To make the actual modification to the nodeapps, the Oracle Clusterware stack must be up on the node where you are running srvctl from. To modify the nodeapps use the ‘srvctl modify nodeapps’ command with the following syntax:

srvctl modify nodeapps -n [-o ] [-A ]

Options Description:

-n Node name.

-o Oracle home for the cluster database.

-A The node level VIP address (/netmask[/if1[|if2|...]]).

$ srvctl modify nodeapps -n node1 -A 192.168.2.125/255.255.255.0/eth0

It should be noted that for the first parameter, you can specify either the hostname associated with the VIP, or the IP address associated with the VIP. Either way, the srvctl command will actually attempt to resolve the IP to a hostname, or the hostname to an IP, and it will store both entries in the OCR. So, assuming that the virtual hostname of ‘node1-v’ resolves to an IP address 192.168.2.125, the below command would have the same effect as the command using the IP address:

$ srvctl modify nodeapps -n node1 -A node1-v/255.255.255.0/eth0Note that the interface names are case sensitive on all platforms. On some platforms, such as Windows, the Interface Name may have spaces in it – i.e. “Local Area Connection 1″. If that is the case, you must enclose the interface name in double quotes – i.e.

srvctl modify nodeapps -n node1 -A 192.168.2.125/255.255.255.0/”Local Area Connection 1″On Unix and Linux systems, this command should be run as root. Attempting to run this command as the ‘oracle’ user or software owner will result in the following error:

PRKO-2117 : This command should be executed as the system privilege user.

On Windows systems, this command should be run as the user who did the original installation. This account should be an account with Local Administrator privileges on each node.

7. After making the change, you should verify that it is correct by re-running

‘srvctl config nodeapps -n -a’Double-check the output to confirm that all parameters are correct.

Starting Resources Back Up

8. Once the modify nodeapps has been executed, you can re-start node-level applications via srvctl with the following syntax:

srvctl start nodeapps -n

i.e.: $ srvctl start nodeapps -n rnode1

If any resources (such as database or ASM) were previously disabled, then they should now be re-enabled and re-started as well.

Repeat the same steps for all the nodes in the cluster. Since SRVCTL is a cluster wide management tool, you can accomplish these tasks for any specific nodes from one node, without the need to login individually to each of the cluster nodes.

Note: If only the IP address is changed, it should not be necessary to make changes to the LISTENER.ORA and TNSNAMES.ORA, provided they are using the vip hostnames for

the ‘HOST=’ entries.

If changing both the hostname and the VIP for a node, it will be necessary to modify the LISTENER.ORA and change the ‘HOST=’ entries to the new VIP hostname. This can be done manually, or by using the NETCA to reconfigure the listener. In addition, changes may need to be made to the TNSNAMES.ORA of any clients connecting to the old HOSTNAME.

In addition to modifying the nodeapps after an IP address or network change of some type, it may also be necessary to modify the networks that are stored in the OCR. If that is the case, please refer to the following note:

Note 283684.1 How to Change Interconnect/Public Interface IP Subnet in a 10g Cluster

36. What is runfixup.sh script in Oracle Clusterware 11g release 2 installation

With Oracle Clusterware 11g release 2, Oracle Universal Installer (OUI) detects when the minimum requirements for an installation are not met, and creates shell scripts, called fixup scripts, to finish incomplete system configuration steps. If OUI detects an incomplete task, then it generates fixup scripts (runfixup.sh). You can run the fixup script after you click the Fix and Check Again Button.

The Fixup script does the following:

If necessary sets kernel parameters to values required for successful installation, including:

- Shared memory parameters.

- Open file descriptor and UDP send/receive parameters.

- Sets shell limits if necessary to required values.

37. What is the difference between TAF and FAN & FCF? at what conditions you use them?

1) TAF with tnsnames

a feature of Oracle Net Services for OCI8 clients. TAF is transparent application failover which will move a session to a backup connection if the session fails. With Oracle 10g Release 2, you can define the TAF policy on the service using dbms_service package. It will only work with OCI clients. It will only move the session and if the parameter is set, it will failover the select statement. For insert, update or delete transactions, the application must be TAF aware and roll back the transaction. YES, you should enable FCF on your OCI client when you use TAF, it will make the failover faster.

Note: TAF will not work with JDBC thin.

2) FAN with tnsnames with aq notifications true

FAN is a feature of Oracle RAC which stands for Fast Application Notification. This allows the database to notify the client of any change (Node up/down, instance up/down, database up/down). For integrated clients, inflight transactions are interrupted and an error message is returned. Inactive connections are terminated.

FCF is the client feature for Oracle Clients that have integrated with FAN to provide fast failover for connections. Oracle JDBC Implicit Connection Cache, Oracle Data Provider for .NET (ODP.NET) and Oracle Call Interface are all integrated clients which provide the Fast Connection Failover feature.

3) FCF, along with FAN when using connection pools

FCF is a feature of Oracle clients that are integrated to receive FAN events and abort inflight transactions, clean up connections when a down event is received as well as create new connections when a up event is received. Tomcat or JBOSS can take advantage of FCF if the Oracle connection pool is used underneath. This can be either UCP (Universal Connection Pool for JAVA) or ICC (JDBC Implicit Connection Cache). UCP is recommended as ICC will be deprecated in a future release.

4) ONS, with clusterware either FAN/FCF

ONS is part of the clusterware and is used to propagate messages both between nodes and to application-tiers

ONS is the foundation for FAN upon which is built FCF.

RAC uses FAN to publish configuration changes and LBA events. Applications can react as those published events in two way :

- by using ONS api (you need to program it)

- by using FCF (automatic by using JDBC implicit connection cache on the application server)

you can also respond to FAN event by using server-side callout but this on the server side (as their name suggests it)

Relationship between FAN/FCF/ONS

ONS –> FAN –> FCF

ONS -> send/receive messages on local and remote nodes.

FAN -> uses ONS to notify other processes about changes in configuration of service level

FCF -> uses FAN information working with conection pools JAVA and others.

38. Can you add voting disk online? Do you need voting disk backup?

Yes, as per documentation, if you have multiple voting disk you can add online, but if you have only one voting disk , by that cluster will be down as its lost you just need to start crs in exclusive mode and add the votedisk using

crsctl add votedisk <path>

39. What are server pools in 11gr2?

Introduction to server pool, (the real meaning of ‘g’ in the oracle’s advertisement since 10g)

Very long post, take free time to read and to get a clear understanding…..

Grid computing is a concept within Oracle database which has been there since 10g. The basic meaning of grid computing is to divide the hard coupling of the availability of a resource over the machines thus letting the resources be available on a ‘wherever and whenever’ kind of basis. This means that there shouldn’t be a restriction on a said resource which must be present on a specific machine itself or can only be accessed from a specific machine. The very same concept is enhanced in 11.2 RAC with the introduction of Server Pools.

Server Pools allow the same functionality of logically dividing the cluster into small segments that can be used for varying workloads. But unlike the traditional mechanism available up to 11.1, which only allows this task by choosing the instances to run on nodes as Preferred & Available and running services using the same architecture, server pools offer a much larger list of attributes which help in the management of resources in a rather simple and transparent way. In server pools, the underlying hosts of the cluster are added (and removed) from the pools much more on-the-fly, and take the burden off the DBA’s shoulders for this task. With just a few parameters to take care of, the whole stack becomes much easier to govern and administer, while still being completely transparent yet powerful to manage all the types of different resources in the RAC clusterware environment, especially when the number of the nodes go beyond two digits.

Server Pool: is managed by the cluster

- Logical division of the cluster into pools of servers

- Applications (including databases) run in one or more server pools

- Managed by crsctl (applications), srvctl (Oracle)

- Defined by 3 attributes (min, max, importance) or a defined list of nodes Min- minimum number of servers (default 0)

- Max – maximum number of servers (default 0 or -1)

- Importance – 0 (least important) to 1000

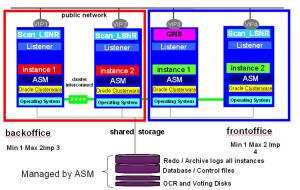

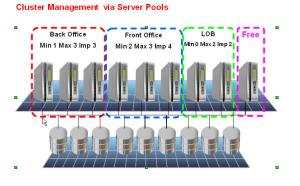

- Consider this diagram,

In this example we have a 4 node cluster and a server pool named back office to run a database. So whatever servers are in that server pool will run an instance of that server pool. This is seen by the red boxes with two instances in the server pool. There is a front office database where another database is running. It has two instances running in it. One for each server in the server pool. Cluster resources can run on any node in the cluster independent of any of the server pools defined in the cluster.

Now services that are used for our oracle databases work slightly different when working with a policy managed environment. When we create a service it can only run in a server pool. So a service can only be defined in one server pool. Where as a database can be run in multiple server pools. So services are uniform (run on all instances in the pool) or singleton (runs on only one instance in the pool). If it is a singleton service and that instance fails we will fail over that service to another instance in the server pool.

Assigning Servers in the Server Pool:

Servers are assigned in the following order:

- Generic server pool

- User assigned server pool

- Free

- Fill all server pools in order of importance until they meet their minimum

- Fill all server pools in order of importance until they meet their maximum

- By Default any left over go into FREE

In the example above: There are three server pools. Note the importance front office has the higher importance so two servers are assigned to it first. The Back office is assigned its minimum of one server and if there are any left over LOB is assigned a server and the rest go into the free pool. Hear we have assign max’s identified. So if there are available servers in Free back office and front office will be able to grow to their maxs.

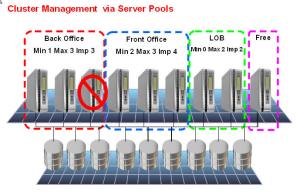

What happens if a server fails.

Lets say in backup office a server goes down. Well we have one in free. So the server would be moved out of free into back office and an instance would be started on that server.

If a server leaves the cluster Oracle Clusterware may move servers from one server pool to another only if a server pool falls below its min. It chooses the server to move from:

- A server pool that is less important.

- A server from a server pool of the same importance which has more servers than its min

Check the status of server pools using crsctl

[grid@host01 ~]$ crsctl status serverpool -pCheck the server pools using srvctl

NAME=Free

IMPORTANCE=0

MIN_SIZE=0

MAX_SIZE=-1

SERVER_NAMES=

PARENT_POOLS=

EXCLUSIVE_POOLS=

ACL=owner:grid:rwx,pgrp:oinstall:rwx,other::r-x

NAME=Generic

IMPORTANCE=0

MIN_SIZE=0

MAX_SIZE=-1

SERVER_NAMES=host01 host02 host03

PARENT_POOLS=

EXCLUSIVE_POOLS=

ACL=owner:grid:r-x,pgrp:oinstall:r-x,other::r-x

NAME=ora.orcladm

IMPORTANCE=1

MIN_SIZE=0

MAX_SIZE=-1

SERVER_NAMES=host01 host02 host03

PARENT_POOLS=Generic

EXCLUSIVE_POOLS=

ACL=owner:oracle:rwx,pgrp:oinstall:rwx,other::r–

NAME=sp1

IMPORTANCE=2

MIN_SIZE=1

MAX_SIZE=2

SERVER_NAMES=

PARENT_POOLS=

EXCLUSIVE_POOLS=

ACL=owner:grid:rwx,pgrp:oinstall:rwx,other::r

[grid@host01 bin]$ srvctl config serverpool -g Free

Server pool name: Free

Importance: 0, Min: 0, Max: -1

Candidate server names:

[grid@host01 bin]$ srvctl config serverpool -g Generic

PRKO-3160 : Server pool Generic is internally managed as part of administrator-

managed database configuration and therefore cannot be queried directly via srvpool

Note:- The MIN_SIZE attribute specifies the cardinality of the resources (database etc) suppose if you have min_size 2, the database instances can run on the two servers in the serverpool. So here its transparent as you are not specifying srvctl add instance –d –i –n etc.

Another Important note:- Adding server pools to clusterware using crsctl (caveat:- using crsctl for adding serverpool will work for non-database resources such as application server etc, for database resources if you are creating serverpool use srvctl instead Read doc here)

For non-database resources,Adding child pools to the serverpools (parentpools)

[grid@host01 ~]$ crsctl add serverpool sp1 -attr "MIN_SIZE=1, MAX_SIZE=1, IMPORTANCE=1"

[grid@host01 ~]$ crsctl add serverpool sp2 -attr "MIN_SIZE=1, MAX_SIZE=1, IMPORTANCE=2"

For database resources create like this

[grid@host01 ~]$ srvctl add srvpool -g sp1 -l 1 -u 2 -i 999 -n host02

[grid@host01 ~]$ srvctl add srvpool -g sp2 -l 1 -u 2 -i 999 -n host03

Note:- Observer the difference, you cannot specify the individual or your wish hosts when using crsctl but when using srvctl you can

[grid@host01 ~]$ crsctl status server -f

NAME=host01

STATE=ONLINE

ACTIVE_POOLS=Free

STATE_DETAILS=

NAME=host02

STATE=ONLINE

ACTIVE_POOLS=sp2

STATE_DETAILS=

NAME=host03

STATE=ONLINE

ACTIVE_POOLS=sp1

STATE_DETAILS=

Host01 is assigned to free pool and rest of the two hosts.

[grid@host01 Disk1]$ crsctl add serverpool sp_child1 -attr "PARENT_POOLS=sp1,

MIN_SIZE=1, MAX_SIZE=1, IMPORTANCE=2"

In order to effectively use the serverpools aka host resources segregation as stated in the starting of this post, oracle has changed the database options, while using dbca in RAC installation you might found the following screen.

From 11G Release 2 Database when you run DBCA , the third screen in the sequence have this for real application clusters (RAC) installation, Configuration type, Admin or policy managed. so What are they?

Administrator Managed Databases:- (as of now)

Traditionally Oracle had defined or had the DBA’s define which instances run on which servers in a RAC environment. They would clearly define that node1 would run RAC1, and node2 would run RAC2, etc….. Those instances would be tied to those nodes. This is known as Administrator Managed Databases because the instances are being managed by the DBA and the DBA has specifically assigned an instance to a server.

Policy Managed Databases:- (going forward)

In a Policy Managed Databases the DBA specifies the requirements of the database workload. IE. How many instances do we want to run in this workload – Cardinality of the database. With this specified Oracle RAC will try to keep that many instances running for that database. If we need to expand the size all we need to do is expand the cardinality. As long as there are that many servers in the cluster the cluster will keep that many up and running.

- The goal behind Policy Managed Databases is to remove the hard coding of a service to a specific instance or service.

- The database can be associated with a server pool rather than a specific set of nodes. It will decide the minimum and maximum no. of servers needed by that resource (database , service, third party application).

- The database will run on the servers which have been allocated to the serverpool it has been assigned to. (uses min_size to determine where it has to run and how may servers it has to run)

- Since servers allocated to the server pool can change dynamically, this would allow Oracle to dynamically deliver services based on the total no. of servers available to the cluster.

- The database will started on enough servers subject to the availability of the servers. We need not hardcode the servers a database should run on.

- Any instance of the database can run on any node. There is no fixed mapping between instance number and node.

- As servers are freed/added/deleted, they will be allocated to existing pools as per the rules mentioned earlier.

- Example:- In theory

DB1 is defined with a minimum of 4 and a maximum of 6 with an importance of 10,

- DB2 is defined with a minimum of 2 and maximum of 3 and an importance of 7, and

- DB3 is set to a minimum of 2 and maximum of 3 with an importance of 5.

- Initially the 8 nodes could be configured as nodes 1-4 allocated to DB1, nodes 5-6 allocated to DB2 and nodes 7-8 allocated to DB3. If node 3 failed for some reason, the system would allocate node 7 or 8 to DB1 because it has a higher importance than DB3 and a minimum requirement of 4 servers, even though it would cause DB3 to fall below the minimum number of servers. If node 3 is re-enabled it would be allocated immediately to DB3 to bring that database back to its minimum required servers.

- If a 9th node were added to the cluster, it would be assigned to DB1 because of the importance factor and the fact that DB1 has not yet hit its maximum number of servers.

To check that a database is admin-managed or policy-managed, we can use the command SRVCTL like below:

[grid@host01 Disk1]$ srvctl config database -d orcladmSo we can see that the database ORCLADM is Admin Managed. To convert this database to Policy managed, you can proceed as follows:

Database unique name: orcladm

Database name: orcladm

Oracle home: /u01/app/oracle/product/11.2.0/dbhome_1

Oracle user: oracle

Spfile: +FRA/orcladm/spfileorcladm.ora

Domain:

Start options: open

Stop options: immediate

Database role: PRIMARY

Management policy: AUTOMATIC

Server pools: orcladm

Database instances: orcladm1,orcladm2 Disk Groups: FRA

Services:

Database is administrator managed

[grid@host01 Disk1]$ srvctl stop database -d orcladmHere sp1 is a server pool over which the database ORCLADM would be running and we can confirm it using the same command SRVCTL: last line say’s that this database is now policy managed

[grid@host01 Disk1]$ srvctl modify database -d orcladm -g sp1

[grid@host01 Disk1]$ srvctl config database -d orcladmBut how to control, the services pointing to specific nodes of the serverpool? by using –c option in srvctl add service.

Database unique name: orcladm

Database name: orcladm

Oracle home: /u01/app/oracle/product/11.2.0/dbhome_1

Oracle user: oracle

Spfile: +FRA/orcladm/spfileorcladm.ora

Domain:

Start options: open

Stop options: immediate

Database role: PRIMARY

Management policy: AUTOMATIC

Server pools: sp1

Database instances:

Disk Groups: FRA

Services:

Database is policy managed

Finally, creating RAC services, First look at the table below for adding a service using srvctl,

srvctl add service -h

Did you see the options serverpool, uniform, singleton, this two options states the service nature, for policy based databases you will not specify the available and preferred instances, instead you will opt for above two. (See last column)

For example:-

If you want to create a service “all” that should run in sp1 (serverpool) on all nodes. the command should be

srvctl add service –d orcladm –s all –g sp1 –c uniform …If you want to create a service “one” that should run in sp1(serverpool) on only one node, the command should be

srvctl add service –d orcladm –s all –g sp1 –c singleton ..Note above I haven’t specified any available or preferred node as orcladm is no more admin managed DB.

Some other notes to DBA,

SIDs are DYNAMIC

- DBA scripts may have to be adjusted

- Environment variables settings in profiles will have to check the current sid

- Directories for LOG files will change over restarts

- Forces Oracle Clusterware to maintain the same

- Node number when restarted (which maintains SID) Automatically done on upgrades

- Required when running pre-11g Release 2 versions in the cluster

- On upgrade, the nodes will be “pinned”

crsctl pin css -n nodename

40. What is Load balancing & how does it work?

Oracle RAC: Load Balancing advisory concepts

Oracle 10gR2 provides an advanced load balancing method to overcome the imbalance of RAC logon’s , especially when there is logon storms, the listeners on the two nodes will simply distribute them evenly across both the nodes. Some case one of the node can be busier than the other . The LBA will calculate how much work each instance in the clustered database has been asked to do. The LBA will then make sure that any new incoming work is routed to the instance(s) that are least busy. This determination takes place on a service-by-service basis across all instances that are providing resources to each service.

The LBA considers the following factors when determining how to perform this “balancing act”:

- Are there any differences in processing power between nodes?

- Are there any sessions that are currently blocked from execution because of waits?

- Have any failures occurred on a node that might block processing from continuing?

- Are there any services that are competing for resources, and have those services been granted a different priority to complete their tasks at hand?

As part of its Fast Application Notification (FAN) event transmission features, Oracle 10g RAC uses Oracle Notification Services (ONS) to communicate the status of cluster resources to all participating node applications in the cluster. In Oracle 10gR2, FAN added a new event type, SERVICE_METRIC, whose event payload contains

information about the relative workload of each node in the RAC cluster, and it’s this information that the Load Balancing Advisory uses to determine how to route new connections throughout the clustered database’s instances.

See sample PMON Trace file here for Metrics

Oracle 10gR2 supplies an upgraded DBMS_SERVICE.MODIFY_SERVICE procedure that modifies an existing RAC service so that it can utilize the Load Balance Advisory for service-by-service load balancing. This procedure provides two crucial parameters, GOAL and CLB_GOAL

Command Reference:-

BEGINNow look at each option,

DBMS_SERVICE.MODIFY_SERVICE(

service_name => ‘ADHOC’

,aq_ha_notifications => TRUE

,goal => DBMS_SERVICE.GOAL_NONE

,clb_goal => DBMS_SERVICE.CLB_GOAL_LONG );

DBMS_SERVICE.MODIFY_SERVICE(

service_name => ‘DSS’

,aq_ha_notifications => TRUE

,goal => DBMS_SERVICE.GOAL_SERVICE_TIME

,clb_goal => DBMS_SERVICE.CLB_GOAL_SHORT

);

DBMS_SERVICE.MODIFY_SERVICE(

service_name => ‘OLTP’

,aq_ha_notifications => TRUE

,goal => DBMS_SERVICE.GOAL_THROUGHPUT

,clb_goal => DBMS_SERVICE.CLB_GOAL_SHORT );

END;

/

GOAL

In addition, the CLB_GOAL parameter provides yet another load balancing method: It tells the LBA how to perform connection load balancing. See Table 2 for a list of its acceptable enumerated constant values and the impact of setting either.

Depending on the settings for these two parameters, the LBA uses the METRICS shown in Table 3 to make its determination of which instance should receive the next set of incoming connections:

Further, the LONG goals can be called as connect time load balancing and SHORT goals called as Runtime load balancing.

Scripts:-

Script to Verify the services and load balancing metrics details

SET PAGESIZE 50

SET LINESIZE 11O

TTITLE 'Services Configured to Use Load Balancing Advisory (LBA) Features|

(From DBA_SERVICES)'

COL name FORMAT A16 HEADING 'Service Name' WRAP

COL created_on FORMAT A20 HEADING 'Created On' WRAP

COL goal FORMAT A12 HEADING 'Service|Workload|Management|Goal'

COL clb_goal FORMAT A12 HEADING 'Connection|Load|Balancing|Goal'

COL aq_ha_notifications FORMAT A16 HEADING 'Advanced|Queueing|High-|Availability|Notification'

SELECT

name

,TO_CHAR(creation_date, 'dd-mm-yyyy hh24:mi:ss') created_on

,goal

,clb_goal

,aq_ha_notifications

FROM dba_services

WHERE goal IS NOT NULL

AND name NOT LIKE 'SYS%'

ORDER BY name

/

TTITLE 'Current Service-Level Metrics|(From GV$SERVICEMETRIC)'

BREAK ON service_name NODUPLICATES

COL service_name FORMAT A08 HEADING 'Service|Name' WRAP

COL inst_id FORMAT 9999 HEADING 'Inst|ID'

COL beg_hist FORMAT A10 HEADING 'Start Time' WRAP

COL end_hist FORMAT A10 HEADING 'End Time' WRAP

COL intsize_csec FORMAT 9999 HEADING 'Intvl|Size|(cs)'

COL goodness FORMAT 999999 HEADING 'Good|ness'

COL delta FORMAT 999999 HEADING 'Pred-|icted|Good-|ness|Incr'

COL cpupercall FORMAT 99999999 HEADING 'CPU|Time|Per|Call|(mus)'

COL dbtimepercall FORMAT 99999999 HEADING 'Elpsd|Time|Per|Call|(mus)'

COL callspersec FORMAT 99999999 HEADING '# 0f|User|Calls|Per|Second'

COL dbtimepersec FORMAT 99999999 HEADING 'DBTime|Per|Second'

COL flags FORMAT 999999 HEADING 'Flags'

SELECT

service_name

,TO_CHAR(begin_time,'hh24:mi:ss') beg_hist

,TO_CHAR(end_time,'hh24:mi:ss') end_hist

,inst_id

,goodness

,delta

,flags

,cpupercall

,dbtimepercall

,callspersec

,dbtimepersec

FROM gv$servicemetric

WHERE service_name IN ('OLTP','DSS','ADHOC')

ORDER BY service_name, begin_time DESC, inst_id

/

RAC: Client & Server connection Load balancing in Oracle

Oracle from 10g onwards (9i as well) has two features to provide the connection load balancing

Client Side load balancing (also called as connect time load balancing)

Server side load balancing (also called as Listener connection load balancing)

Client Side load balancing:- Oracle client side load balancing feature enables clients to randomize the connection requests among all the available listeners based on their load.

An tns entry that contains all nodes entries and use load_balance=on (default its on) will use the connect time load balancing or client side load balancing.

Sample Client Side TNS Entry:-

finance =Server side load balancing:- This improves the connection performance by balancing the number of active connections among multiple instances and dispatchers. In a single instance environment (shared servers), the listener selects the least dispatcher to handle the incoming client requests. In a rac environments, PMON is aware of all instances load and dispatchers , and depending on the load information PMON redirects the connection to the least loaded node.

(DESCRIPTION =

(ADDRESS = (PROTOCOL = TCP)(HOST = myrac2-vip)(PORT = 2042))

(ADDRESS = (PROTOCOL = TCP)(HOST = myrac1-vip)(PORT = 2042))

(ADDRESS = (PROTOCOL = TCP)(HOST = myrac3-vip)(PORT = 2042))

(LOAD_BALANCE = yes)

(CONNECT_DATA =

(SERVER = DEDICATED)

(SERVICE_NAME = FINANCE) (FAILOVER=ON)

(FAILOVER_MODE = (TYPE = SELECT) (METHOD = BASIC) (RETRIES = 180) (DELAY = 5))

)

)

In a shared server configuration, a listener selects a dispatcher in the following order:-

- 1. Least Loaded node

- 2. Least loaded instance

- 3. Least loaded dispatcher for that instance

- 1. Least loaded node

- 2. Least loaded instance

Sample Tns entry should be in an instances of RAC cluster,

local_listener=LISTENER_MYRAC1TNSNAMES.ORA entries: Below entries should be there on all nodes tnsnames.ora file.

remote_listener = LISTENERS_MYRACDB

LISTENERS_MYRACDB = (ADDRESS_LIST =Listener route the incoming connections to least loaded nodes based on the load profile statistics that are dynamically updated by PMON. The more updates the busier the node, Updates can occur from 3 secs to 10 mins frequency and can be visible in listener log as follows:-

(ADDRESS = (PROTOCOL = TCP)(HOST = myrac1-vip)(PORT = 2042))

(ADDRESS = (PROTOCOL = TCP)(HOST = myrac3-vip)(PORT = 2042))

(ADDRESS = (PROTOCOL = TCP)(HOST = myrac2-vip)(PORT = 2042))

)

LISTENER_MYRAC3 =

(ADDRESS_LIST =

(ADDRESS = (PROTOCOL = TCP)(HOST = myrac3-vip)(PORT = 2042))

)

LISTENER_MYRAC2 = (ADDRESS_LIST =

(ADDRESS = (PROTOCOL = TCP)(HOST = myrac2-vip)(PORT = 2042))

)

LISTENER_MYRAC1 =

(ADDRESS_LIST =

(ADDRESS = (PROTOCOL = TCP)(HOST = myrac1-vip)(PORT = 2042))

)

16-SEP-2012 01:22:58 * service_update * DEVPRD1 * 0Further you can trace the pmon for load balancing updates from the listener

Place 10257 level 16 event and restart the instance and check pmon trace file.

Trace contents

kmmgdnu: MYRACDB goodness 27As you see above the node load increases from 687 to 847 and the the process from 65 to 70

kmmgdnu: MYRACDB goodness=27, delta=1, flags=0×4:unblocked/not overloaded, update=0×6:G/D/-

kmmlrl: 65 processes kmmlrl: node load 687 kmmlrl: instance load 35

kmmlrl: nsgr update returned 0

flags=0×4:unblocked/not overloaded, update=0×6:G/D/-

kmmgdnu: MYRACDBXDB

goodness=0, delta=1,

flags=0×5:unblocked/not overloaded, update=0×6:G/D/-

kmmgdnu: MYRACDB goodness=27, delta=1, flags=0×4:unblocked/not overloaded, update=0×6:G/D/-

kmmlrl: 66 processes

kmmlrl: node load 775

kmmlrl: nsgr update returned 0

kmmgdnu: MYRACDB

goodness=28, delta=1,

flags=0×4:unblocked/not overloaded, update=0×6:G/D/-

kmmlrl: 70 processes kmmlrl: node load 847 kmmlrl: nsgr update returned 0

*** 2012-09-16 03:47:26.891

err=-319 tbtime=0 etime=319 srvs=1 nreqs=0 sreq

‘Node load’ gives an indication of how busy the machine is. By default, ‘node load’ is the primary factor for determining where new connections will go.

Hope its clear about two Connection load balancing features in Oracle,

In this post you have understood,

1) Client & server side load balancing with normal database tns connection strings

2) How pmon get updated the load information

No comments:

Post a Comment